I have the bad habit of testing my new disks for 2 months before moving the original disks to storage, and only after another 2 months do I move/sell/retire the old ones. I recently bought 2 Seagate Barracuda ST2000DM001 @7200RPM; one is going straight back under warranty, as it could never even finish a format because of its bad sector count and UDMA timeouts.

Well, as the primary use for these two disks was to replace two older IDE Seagate disks (ST3320620A, 320GB @7200RPM), after copying all the old data I had a lot of free space to test with, and after the fiasco with the first disk I wanted to stress this one.

I searched for tools to test I/O and stress disks and found spew and iozone, both very useful. By the way, if you want nice graphs and benchmarks, go for iozone; but I needed to create arbitrary files of random data and test write and read accuracy, so I tried spew, first filling whatever free space was left on each partition of the drive… But as you may be guessing, after the first test it is always the same pattern, and I also wanted to try with smaller files, so I ended up making a small script:

    #!/bin/bash
    for a in $(seq 20); do
        for i in $(seq 1 100); do
            spew --raw -d -o 1m -b 16m 1g /home/D/spew/bigfile$i
            spew --raw -d -o 1m -b 16m 1g /home/E/spew/bigfile$i
            echo $i
        done
        rm /home/E/spew/bigfile*
        rm /home/D/spew/bigfile*
    done
    exit 0
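If you don't have spew at hand, the general write-then-verify idea can be roughed out with standard tools. This is only a sketch and not how spew works internally: the function name `verify_file`, the scratch directory and the use of `/dev/urandom` with `sha256sum` are my own assumptions for illustration.

```shell
#!/bin/bash
# Sketch only: write random data, then read it back and compare checksums.
# verify_file and the scratch paths are hypothetical, not part of spew.

verify_file() {
    local target=$1      # file to create
    local size_mb=$2     # size in MiB
    local expected actual

    # Write random data, checksumming the stream on its way to the file.
    expected=$(head -c "$((size_mb * 1024 * 1024))" /dev/urandom \
        | tee "$target" | sha256sum | cut -d' ' -f1)

    sync    # ask the kernel to flush dirty pages to the disk

    # Read the file back and checksum what was stored.
    actual=$(sha256sum < "$target" | cut -d' ' -f1)

    # Succeed only if both checksums match.
    [ "$expected" = "$actual" ]
}

# Usage: a small 4 MiB file in a scratch directory.
dir=$(mktemp -d)
if verify_file "$dir/testfile" 4; then
    echo "OK"
else
    echo "MISMATCH"
fi
rm -rf "$dir"
```

Keep in mind that the read-back here may well be served from the page cache instead of the platters, so for a real disk test you would want direct I/O or files much bigger than RAM.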

Also, as I'm lazy, I'm running it in screen to let it run for a while, letting munin draw the graphs and sysstat collect I/O usage. I'm running it with the following line:

    time sh testdisk.sh >> test.log 2>&1
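The intent of that line is to append both stdout and stderr to the log. In bash that is conventionally written as `>> file 2>&1`, and the order matters: stdout is pointed at the file first, then stderr is pointed at wherever stdout goes. A tiny demo, where `noisy` is a hypothetical stand-in for the test script:

```shell
#!/bin/bash
# Hypothetical stand-in for testdisk.sh: one line on stdout, one on stderr.
noisy() {
    echo "to stdout"
    echo "to stderr" >&2
}

log=$(mktemp)

# stdout is appended to the log first, then stderr is sent to the same place.
noisy >> "$log" 2>&1

# Count how many lines ended up in the log.
lines=$(wc -l < "$log")
echo "lines captured: $lines"
rm -f "$log"
```

One thing to be aware of: in bash `time` is a shell keyword and prints its report on the shell's own stderr, so the timing itself shows up on the terminal and not in the log unless the whole thing is grouped, as in `{ time sh testdisk.sh; } >> test.log 2>&1`.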

So it creates 100GB of files on each disk, one by one, 20 times… The first run did not finish as we had a power outage, but I'm going to try this for a week, or at least until next Tuesday, which is when I have time to send the other disk in for warranty. And if this one is going to fail, Google's paper says it will be sooner rather than later (at least I hope so).
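To get a feel for how much data a complete run pushes through each drive, here is the arithmetic as a quick sketch. The numbers come straight from the script; treating spew's `1g` as 1 GiB is my assumption, and the variable names are only for illustration.

```shell
#!/bin/bash
# Values taken from the script: 100 files of 1g each, 20 passes, 2 disks.
files_per_pass=100
file_size_gib=1     # assumption: spew's "1g" is treated as 1 GiB
passes=20
disks=2

per_pass=$((files_per_pass * file_size_gib))   # written per disk in one pass
per_disk=$((per_pass * passes))                # written per disk over all passes
total=$((per_disk * disks))                    # written across both disks

echo "per pass, per disk: ${per_pass} GiB"
echo "per disk, all passes: ${per_disk} GiB"
echo "both disks: ${total} GiB"
```

Under that assumption a full run writes on the order of the whole 2TB capacity to each drive, just spread over many smaller files instead of one big fill.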

And from first-hand experience, a burn-in test (stress test) serves to expose bad components earlier…

Update 15/11/2012

Why change the behavior of spew and not just create one BIG file? Well, I wanted sustained stress, and the cycle of writing a big file, then checking it, then deleting it was not what I wanted, hence the change to creating "smaller" files to keep the activity sustained.

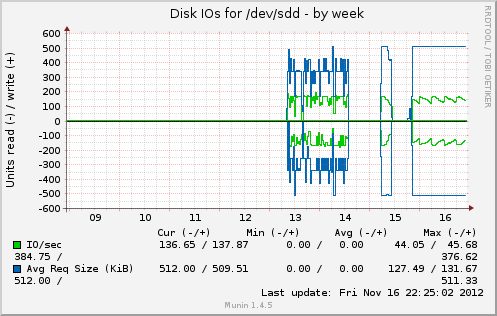

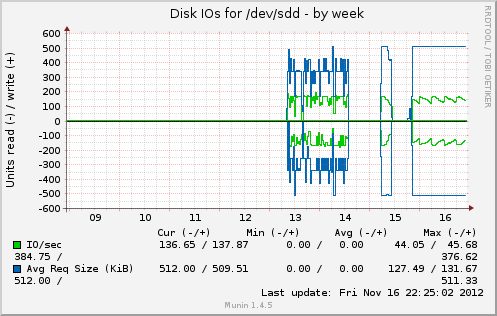

In the images you can see: 13-14, the workload of creating, checking and deleting big files; 15, the new pattern of smaller files until the power outage; and since last night, the new pattern again, which is more like what I was aiming for.

